Oasis: Open-World Real-Time AI Video Model FAQ

Quick Navigation:

- How does Oasis handle real-time performance?

- What GPUs are compatible with running Oasis?

- What resolution and frame rate can Oasis achieve?

- What makes the Sohu chip significant for Oasis?

- How does Oasis differ from other text-to-video models?

- What challenges did the development of Oasis face?

- What is the inference time per frame for Oasis?

- What kind of optimizations were necessary for Oasis to function?

- What future improvements are planned for Oasis?

- What are the potential applications of Oasis beyond gaming?

- What limitations does Oasis currently have?

- What makes transformer-based models suitable for video generation?

- How does diffusion training contribute to Oasis?

- What benefits do temporal attention layers provide in Oasis?

- What is the role of ViT VAE in Oasis?

- What is the significance of Decart’s proprietary inference platform?

- What hardware technologies support Oasis’s performance?

- How does Oasis plan to overcome current limitations?

- What is the long-term vision for Oasis?

- Can you play Oasis’s Minecraft online?

How does Oasis handle real-time performance?

To achieve real-time performance, Oasis relies on Decart’s proprietary inference framework, which optimizes GPU utilization and reduces latency through advanced communication primitives and kernel acceleration.

What GPUs are compatible with running Oasis?

Currently, Oasis is optimized for NVIDIA H100 Tensor Core GPUs, but it is also built to support the upcoming Sohu chip by Etched for enhanced efficiency and higher resolutions.

What resolution and frame rate can Oasis achieve?

Oasis currently runs at 360p resolution and 20 frames per second (fps) on NVIDIA H100 GPUs. With Sohu, the model is expected to support up to 4K resolution at comparable performance levels.
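The jump from 360p to 4K is larger than the names suggest. A quick pixel count makes the difference concrete. This is a sketch that assumes "360p" means 640x360 and "4K" means 3840x2160 (UHD); the FAQ does not state Oasis's exact frame dimensions.

```python
# Assumption: "360p" = 640x360 (16:9) and "4K" = 3840x2160 (UHD).
# Oasis's exact output dimensions are not given in this FAQ.
RESOLUTIONS = {
    "360p": (640, 360),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Return the number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(high: str, low: str) -> float:
    """How many times more pixels a frame at `high` has than one at `low`."""
    return pixels(high) / pixels(low)


if __name__ == "__main__":
    fps = 20  # frame rate quoted for H100
    for name in RESOLUTIONS:
        per_frame = pixels(name)
        print(f"{name}: {per_frame:,} pixels/frame, {per_frame * fps:,} pixels/s at {fps} fps")
    print(f"4K vs 360p: {pixel_ratio('4K', '360p'):.0f}x more pixels")
```

Under these assumptions a 4K frame carries 36 times as many pixels as a 360p frame, and holding the same frame rate multiplies the per-second workload by the same factor.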

What makes the Sohu chip significant for Oasis?

The Sohu chip is designed to run transformer-based models like Oasis more efficiently. At the same price and power consumption as an NVIDIA H100 GPU, Sohu can support 10 times more users while enabling higher resolution output.

How does Oasis differ from other text-to-video models?

Oasis stands out by generating interactive gameplay in real time. Other video models such as Sora, Mochi-1, and Runway generate complete clips after the fact and can take several seconds per frame, whereas Oasis produces each frame in direct response to user input.

(Credit: Decart)

What challenges did the development of Oasis face?

Key challenges included achieving real-time inference with minimal latency and keeping GPU utilization high. Kernels and techniques built for LLM inference did not carry over to this workload, so Decart developed proprietary solutions to reach the required performance.

What is the inference time per frame for Oasis?

Oasis achieves an inference time of 47 ms per frame and 150 ms per training iteration, allowing for smooth, real-time gameplay.
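The 47 ms figure can be checked against the 20 fps frame rate quoted earlier: at 20 fps each frame has a 50 ms budget. A minimal sketch of that arithmetic follows; it counts model inference only and, as a simplifying assumption, ignores decoding, networking, and input handling.

```python
# Frame-time budget check using figures quoted in this FAQ:
# 20 fps on H100 and 47 ms of inference per frame.


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate, in ms."""
    return 1000.0 / fps


def max_fps(inference_ms: float) -> float:
    """Upper bound on frame rate if inference were the only per-frame cost."""
    return 1000.0 / inference_ms


def fits_budget(inference_ms: float, fps: float) -> bool:
    """True if per-frame inference time fits inside the frame budget."""
    return inference_ms <= frame_budget_ms(fps)


if __name__ == "__main__":
    budget = frame_budget_ms(20)  # 50.0 ms per frame
    slack = budget - 47           # time left for everything except inference
    print(f"budget={budget:.1f} ms, slack={slack:.1f} ms, "
          f"max fps from inference alone={max_fps(47):.1f}")
```

The roughly 3 ms of slack shows how tight the budget is: any extra per-frame overhead pushes the system below 20 fps, which is why the inference optimizations described below matter.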

What kind of optimizations were necessary for Oasis to function?

Decart’s proprietary infrastructure accelerates basic PyTorch primitives and combines advanced operations to achieve high GPU utilization. Optimized communication protocols improve data handling across the server.

What future improvements are planned for Oasis?

Future enhancements include better handling of hazy initial outputs, improved memory for long-term detail recall, and more robust results when starting from out-of-distribution images.

What are the potential applications of Oasis beyond gaming?

Oasis’s underlying technology could extend to interactive experiences on other entertainment platforms, generating user-specific content in real time and supporting new forms of AI-driven media.

What limitations does Oasis currently have?

Oasis may produce unclear results when initialized with images outside its training distribution, and its limited memory makes it hard to recall details over long sequences. Both are areas of ongoing research and development.

What makes transformer-based models suitable for video generation?

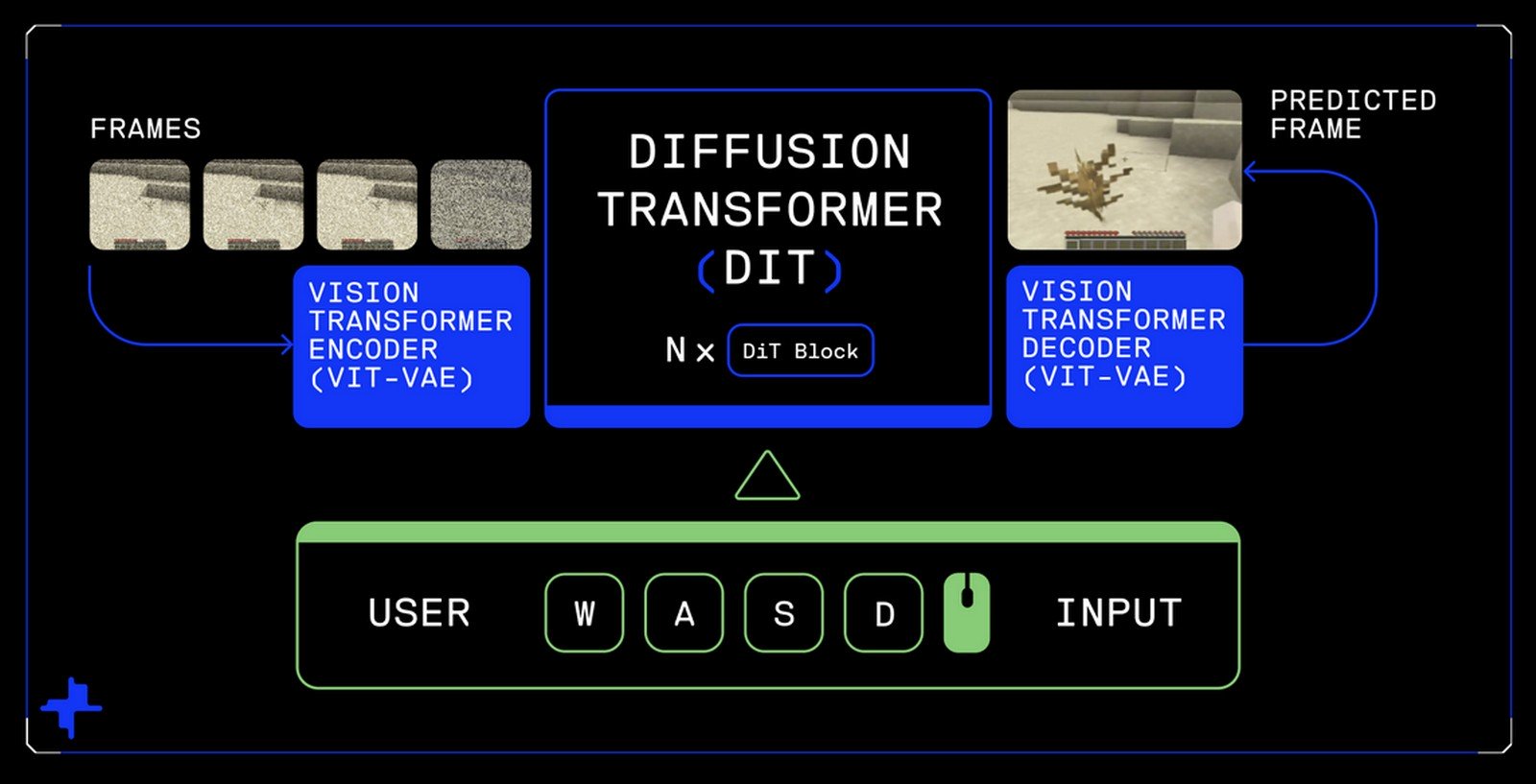

Transformers excel at handling sequential data and can integrate both spatial and temporal attention. This makes them well-suited for generating coherent video frames that depend on prior outputs and user interactions.
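To illustrate the spatial half of that idea, here is a minimal NumPy sketch of scaled dot-product self-attention in which patch tokens attend only to other tokens in the same frame. For brevity it assumes a single head and identity query/key/value projections (no learned weights); Oasis's actual layer configuration is not described in this FAQ.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Scaled dot-product attention over the second-to-last axis."""
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def spatial_attention(x):
    """Self-attention among patch tokens *within* each frame.

    x: (frames, tokens_per_frame, dim). The leading axis acts as a batch
    axis, so tokens in frame t never attend to tokens in another frame.
    Identity projections (q = k = v = x) are a simplifying assumption.
    """
    return attention(x, x, x)


rng = np.random.default_rng(0)
video_tokens = rng.standard_normal((4, 16, 8))  # 4 frames, 16 patches, dim 8
out = spatial_attention(video_tokens)
print(out.shape)  # (4, 16, 8)
```

Because each frame is processed independently here, information only flows across frames once a temporal attention layer is added on top.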

How does diffusion training contribute to Oasis?

Diffusion training teaches the model to reverse a process that gradually adds Gaussian noise to data. At generation time, the model starts from noisy inputs and refines them iteratively into realistic video frames.
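The noising side of this process has a simple closed form. The sketch below shows the standard DDPM-style forward process and noise-prediction loss in NumPy. The linear beta schedule, the 1,000 steps, and the epsilon-prediction objective are common defaults assumed for illustration; this FAQ does not specify Oasis's exact training objective or schedule.

```python
import numpy as np


def linear_beta_schedule(steps: int, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (a common DDPM default, assumed here)."""
    return np.linspace(beta_start, beta_end, steps)


def add_noise(x0, t, alpha_bar, rng):
    """Sample x_t ~ q(x_t | x_0) in closed form.

    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, eps ~ N(0, I)
    Returns the noisy sample and the noise, which is the training target
    for an epsilon-prediction model.
    """
    eps = rng.standard_normal(x0.shape)
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps


def denoising_loss(eps_pred, eps):
    """Mean squared error between predicted and true noise."""
    return float(np.mean((eps_pred - eps) ** 2))


betas = linear_beta_schedule(1000)
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance kept at step t

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 4))  # stand-in for a clean (latent) frame
xt, eps = add_noise(x0, t=500, alpha_bar=alpha_bar, rng=rng)
# A real model would predict eps from (xt, t, context); a perfect one scores 0.
print(denoising_loss(eps, eps))  # 0.0
```

Generation runs the learned model in the opposite direction, removing a little predicted noise at each step until a clean frame remains.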

What benefits do temporal attention layers provide in Oasis?

Temporal attention layers allow Oasis to maintain context across video frames, ensuring that the gameplay remains coherent and responsive to user actions.
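A minimal NumPy sketch of temporal attention: each patch position attends to the same position in other frames, and a causal mask restricts frame t to frames 0..t. The causal mask is an assumption consistent with real-time generation (a frame cannot depend on frames that do not exist yet); as in a plain single-head layer, identity projections are used for brevity, and Oasis's real layer design is not detailed in this FAQ.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability; masked -inf entries become 0.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_attention(x, causal=True):
    """Self-attention across frames at each spatial position.

    x: (frames, tokens_per_frame, dim). Each patch position attends to the
    same position in other frames. With causal=True, frame t only sees
    frames 0..t.
    """
    xt = np.swapaxes(x, 0, 1)  # (tokens_per_frame, frames, dim)
    scores = xt @ np.swapaxes(xt, -1, -2) / np.sqrt(xt.shape[-1])  # (S, T, T)
    if causal:
        t = x.shape[0]
        allowed = np.tril(np.ones((t, t), dtype=bool))  # lower triangle = past
        scores = np.where(allowed, scores, -np.inf)
    out = softmax(scores) @ xt  # (S, T, dim)
    return np.swapaxes(out, 0, 1)  # back to (frames, tokens_per_frame, dim)


rng = np.random.default_rng(0)
frames = rng.standard_normal((5, 16, 8))  # 5 frames, 16 patches, dim 8
out = temporal_attention(frames)
print(out.shape)  # (5, 16, 8)
```

With the mask in place, changing a later frame never alters the outputs for earlier frames, which is the property an interactive, frame-by-frame generator needs.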

What is the role of ViT VAE in Oasis?

The ViT (Vision Transformer) VAE compresses image data into a compact latent space, which makes the diffusion phase cheaper to run and lets the model focus on high-level features.
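The sketch below shows two pieces of that idea in NumPy: splitting a frame into ViT-style patch tokens, and a toy VAE encoder head that maps each token to a smaller latent using the reparameterization trick. It is not Oasis's encoder. The weights are random and untrained, a real ViT VAE would apply transformer layers before this head and pair it with a decoder, and the image size, patch size, and latent width are hypothetical values chosen for illustration.

```python
import numpy as np


def patchify(img, patch):
    """Split an (H, W, C) image into non-overlapping patch x patch tokens.

    Returns (num_patches, patch * patch * C), the token layout a ViT consumes.
    """
    h, w, c = img.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    x = img.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)


def encode(tokens, w_mu, w_logvar, rng):
    """Toy VAE encoder head: project each token to a latent mean and
    log-variance, then sample z = mu + sigma * eps (reparameterization)."""
    mu = tokens @ w_mu
    logvar = tokens @ w_logvar
    z = mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)
    return z, mu, logvar


rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))     # hypothetical toy RGB frame
tokens = patchify(img, patch=16)  # 16 tokens of 768 values each
d_in, d_latent = tokens.shape[1], 16  # hypothetical latent width
w_mu = rng.standard_normal((d_in, d_latent)) / np.sqrt(d_in)
w_logvar = rng.standard_normal((d_in, d_latent)) / np.sqrt(d_in)
z, mu, logvar = encode(tokens, w_mu, w_logvar, rng)
print(tokens.shape, z.shape, img.size / z.size)  # (16, 768) (16, 16) 48.0
```

In this toy setup the diffusion model would work on 256 latent values instead of 12,288 pixel values, a 48x reduction, which illustrates why operating in latent space makes each denoising step cheaper.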

What is the significance of Decart’s proprietary inference platform?

Decart’s platform plays a critical role in enabling real-time inference by optimizing GPU workloads and minimizing latency through custom kernel and communication strategies.

What hardware technologies support Oasis’s performance?

Oasis leverages NVIDIA H100 Tensor Core GPUs, optimized with NVLink, PCIe Gen 5, and NUMA technologies for enhanced communication and data transfer, reducing bottlenecks and ensuring smooth real-time output.

How does Oasis plan to overcome current limitations?

The development team is exploring model scaling, data augmentation, and further optimizations in hardware and software to address the model’s current challenges and latency constraints.

What is the long-term vision for Oasis?

The long-term vision involves scaling the technology for more complex simulations and broader applications, including non-gaming interactive platforms that use AI-driven real-time video generation.

Can you play Oasis’s Minecraft online?

Yes, you can try it on Decart’s website at https://oasis.decart.ai/welcome. You can also export your gameplay as an MP4 video file.
